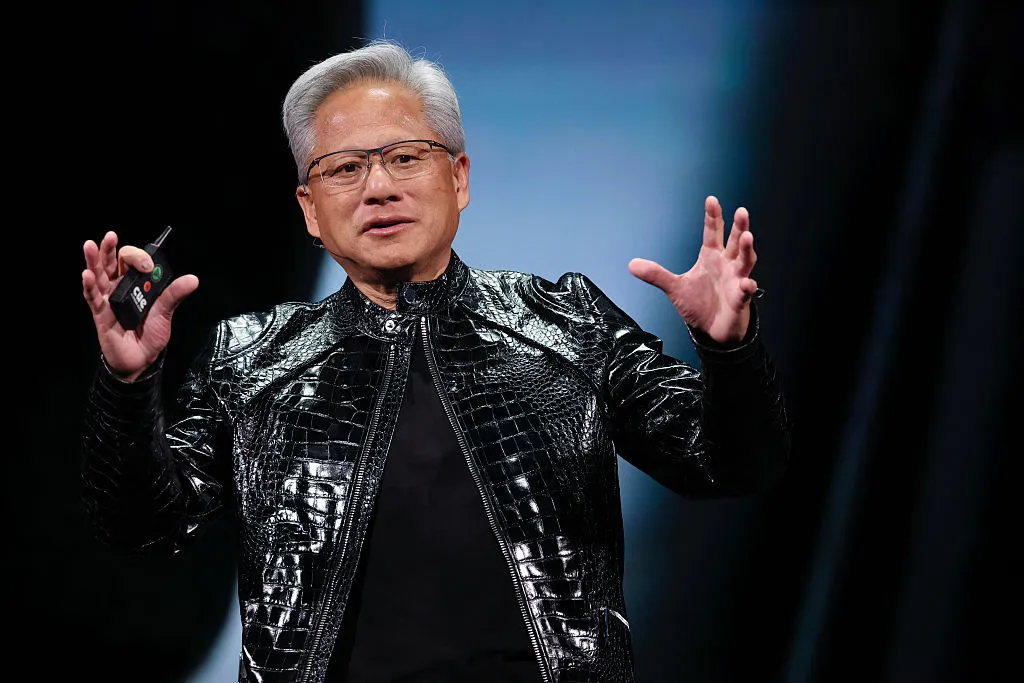

Jensen Huang Shakes Vegas With Nvidia’s Physical A.I. Vision at CES

Jensen Huang opened CES 2026 with a 90-minute keynote on Nvidia’s latest innovations. Patrick T. Fallon / AFP via Getty Images

Nvidia CEO Jensen Huang is the biggest celebrity in Las Vegas this week. His CES keynote at the Fontainebleau Resort proved harder to get into than any sold-out Vegas shows. Journalists who cleared their schedules for the event waited for hours outside the 3,600-seat BleauLive Theatre. Many who arrived on time—after navigating the sprawling maze of conference venues and, in some cases, flying in from overseas to see the tech king of the moment—were turned away due to overcapacity and redirected to a watch party outside, where some 2,000 attendees gathered in a mix of frustration and reverence.

Shortly after 1 p.m., Huang jogged onto the stage, wearing a glistening, embossed black leather jacket, and wished the crowd a happy New Year. He opened with a brisk history of A.I., tracing the last few years of exponential progress—from the rise of large language models to OpenAI’s advances in reasoning systems and the explosion of so-called agentic A.I. All of it built toward the theme that dominated the bulk of his 90-minute presentation: physical A.I.

Physical A.I. is a concept that has gained momentum among leading researchers over the past year. The goal is to train A.I. systems to understand the intuitive rules humans take for granted—such as gravity, causality, motion and object permanence—so machines can reason about and safely interact with real environments.

Nvidia enters the self-driving race

Huang unveiled Alpamayo, a world foundational model designed to power autonomous driving. He called it “the world’s first reasoning autonomous driving A.I.”

To demonstrate, Nvidia played a one-shot video of a Mercedes vehicle equipped with Alpamayo navigating busy downtown San Francisco traffic. The car executed turns, stopped for lights and vehicles, yielded to pedestrians and changed lanes. A human driver sat behind the wheel throughout the drive but did not intervene.

One particularly interesting thing Huang discussed was how Nvidia trains physical A.I. systems—a fundamentally different challenge from training language models. Large language models learn from text, of which humanity has produced enormous quantities. But how do you teach an A.I. Newton’s second law of motion?

“Where does that data come from?” Huang asked. “Instead of languages—because we created a bunch of text that we consider ground truths that A.I. can learn from—how do we teach an A.I. the ground truths of physics? There are lots and lots of videos, but it’s hardly enough to capture the diversity of interactions we need.”

Nvidia’s answer is synthetic data: information generated by A.I. systems based on samples of real-world data. In the case of Alpamayo, another Nvidia world model—called Cosmos—uses limited real-world inputs to generate far more complex, physically plausible videos. A basic traffic scenario becomes a series of realistic camera views of cars interacting on crowded streets. A still image of a robot and vegetables turns into a dynamic kitchen scene. Even a text prompt can be transformed into a video with physically accurate motion.

Nvidia said the first fleet of Alpamayo-powered robotaxis, built in the 2025 Mercedes-Benz CLA vehicles, is slated to launch in the U.S. in the first quarter, followed by Europe in the second quarter and Asia later in 2026.

For now, Alpamayo remains a Level 2 autonomous driving system—similar to Tesla’s Full Self-Driving—which requires a human driver to remain attentive behind the wheel at all times. Nvidia’s longer-term goal is Level 4 autonomy, where vehicles can operate without human supervision in specific, constrained environments. That’s one step below full autonomy, or Level 5.

“The ChatGPT moment for physical A.I. is nearly here,” Huang said in a voiceover accompanying one of the videos shown during the keynote.